I live and work in two different cities; on the commute, I constantly ask my phone for advice: When’s the next train? Must I take the bus, or can I afford to walk and still make the day’s first meeting? I let my phone direct me to places to eat and things to see, and I’ll admit that for almost any question, my first impulse is to ask the internet for advice.

My deference to machines puts me in good company. Professionals concerned with mightily important questions are doing it, too, when they listen to machines to determine who is likely to have cancer, pay back their loan, or return to prison. That’s all good insofar as we need to settle clearly defined, factual questions that have computable answers.

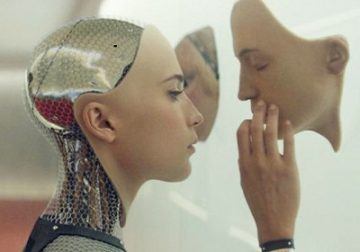

Imagine now a wondrous new app. One that tells you whether it is permissible to lie to a friend about their looks, to take the plane in times of global warming, or whether you ought to donate to humanitarian causes and be a vegetarian. An artificial moral advisor to guide you through the moral maze of daily life. With the push of a button, you will competently settle your ethical questions; if many listen to the app, we might well be on our way to a better society.

Concrete efforts to create such artificial moral advisors are already underway. Some scholars herald artificial moral advisors as vast improvements over morally frail humans, as presenting the best opportunity for avoiding the extinction of human life at our own hands. They demand that we listen to machines for ethical advice. But should we?
